Breakthrough in brain-computer interface gives voice to the voiceless

Imagine saying a sentence using only your thoughts. That's exactly what scientists in Shanghai are working to make possible.

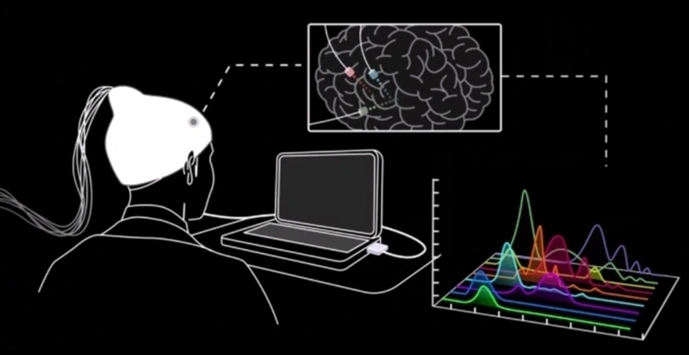

Researchers from the Shanghai Yansi Institute of Brain-like Artificial Intelligence and Huashan Hospital have jointly developed a brain-computer interface (BCI) system that decodes intended Chinese speech with what they describe as unprecedented accuracy.

The system, now in clinical trials, could help restore communication for patients with speech impairments caused by amyotrophic lateral sclerosis (ALS), stroke, and other neurological conditions.

In the clinical trial, electrodes were implanted in the brains of 10 epilepsy patients. After just 100 minutes of training, during which participants read aloud 54 Chinese characters, the system's electroencephalogram (EEG)-based large language model learned to map their brain activity. It did so by breaking each spoken character down into its phonetic components (the initial consonant and the vowel) and precisely identifying the neural signals corresponding to each component.
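To make the decomposition step described above more concrete, here is a minimal sketch of how a romanized (pinyin) syllable can be split into an initial consonant and the vowel portion that follows it, often called the "final". It is an illustration only, assuming toneless pinyin input; it is not the research team's code, and the helper name `split_syllable` is hypothetical.

```python
# Hypothetical illustration: split a toneless pinyin syllable into
# its initial consonant and its final (the vowel portion).
# Two-letter initials are listed first so "zh" is matched before "z".
# "y" and "w" are treated as initials here for simplicity; many
# linguistic analyses treat them as spelling conventions instead.
INITIALS = [
    "zh", "ch", "sh",
    "b", "p", "m", "f", "d", "t", "n", "l",
    "g", "k", "h", "j", "q", "x", "r", "z", "c", "s",
    "y", "w",
]


def split_syllable(syllable: str) -> tuple[str, str]:
    """Return (initial, final); the initial is "" for vowel-initial syllables."""
    s = syllable.lower()
    for initial in INITIALS:
        # Require at least one character after the initial, so the final is non-empty.
        if s.startswith(initial) and len(s) > len(initial):
            return initial, s[len(initial):]
    return "", s


for syl in ["zhong", "guo", "ai", "shi"]:
    print(syl, split_syllable(syl))
```

A decoder built on this idea only has to learn the neural signature of a few dozen initials and finals rather than of every whole character.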

Although it was trained on only 54 characters, the model can decode 1,951 commonly used Chinese characters, an extrapolation rate of about 36-fold, and convert a person's intended speech into text in real time.
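The 36-fold figure is the ratio of decodable characters to training characters (1,951 / 54 ≈ 36.1). The sketch below also illustrates, under a simplifying assumption, why a small training set can generalize: if each character is treated as an (initial, final) pair and tones are ignored, any new character whose initial and final both appeared during training can in principle be composed from parts the model has already learned. The training syllables and the `composable` rule are hypothetical examples, not the team's decoding algorithm.

```python
# Extrapolation rate reported in the article:
# decodable characters divided by training characters.
TRAINED_CHARS = 54
DECODABLE_CHARS = 1951
extrapolation = DECODABLE_CHARS / TRAINED_CHARS
print(f"{extrapolation:.1f}-fold")  # 36.1-fold

# Hypothetical training syllables, each already split into (initial, final).
training = [("zh", "ong"), ("g", "uo"), ("sh", "i"), ("m", "a")]
seen_initials = {initial for initial, _ in training}
seen_finals = {final for _, final in training}


def composable(initial: str, final: str) -> bool:
    """Assumed rule: a syllable is decodable if both of its parts were seen in training."""
    return initial in seen_initials and final in seen_finals


print(composable("zh", "uo"))  # True: "zhuo" never appeared as a whole
print(composable("x", "ue"))   # False: neither "x" nor "ue" was seen
```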

English has fewer than 50 phonemes, whereas Chinese has more than 400 phonetic variations arising from combinations of initial consonants, vowels, and tones. This makes brain signals associated with Chinese speech considerably harder to decode.

The research team's large language model has overcome this barrier, achieving recognition accuracy of more than 83 percent for Chinese initial consonants and more than 84 percent for vowels.

Professor Li Meng, chief scientist at the Yansi Institute, believes this breakthrough in BCI technology will enable the precise and efficient translation of human thoughts into text.

The technology may also pave the way for future applications such as controlling devices by thought alone, interacting in the metaverse, and even creating a painting directly from one's imagination.

Mao Ying, president of Huashan Hospital, said the EEG-based model used in the trial was made possible by a high-quality intracranial EEG database built at the hospital.

Drawing on this database, currently the world's largest collection of human intracranial EEG data, the Yansi Institute team independently developed a large language model capable of reading brain signals with high precision, according to Li.

Source: Jiefang Daily